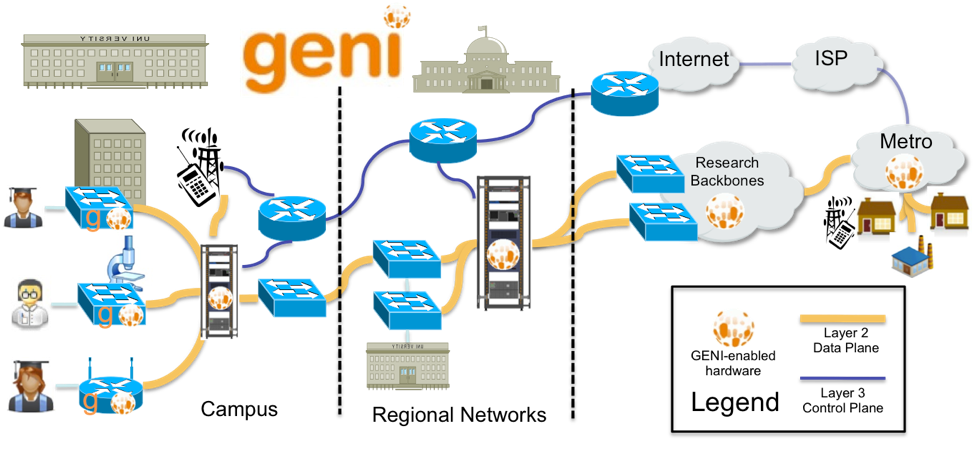

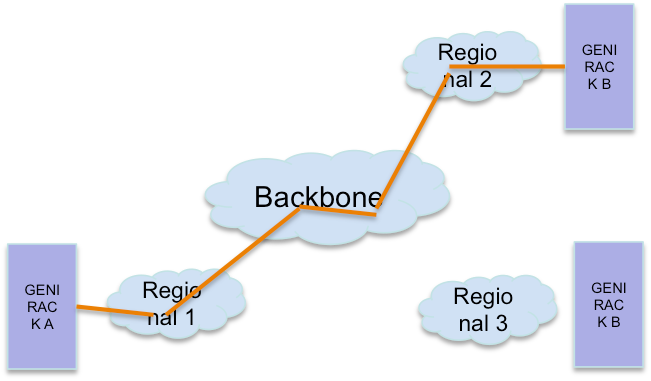

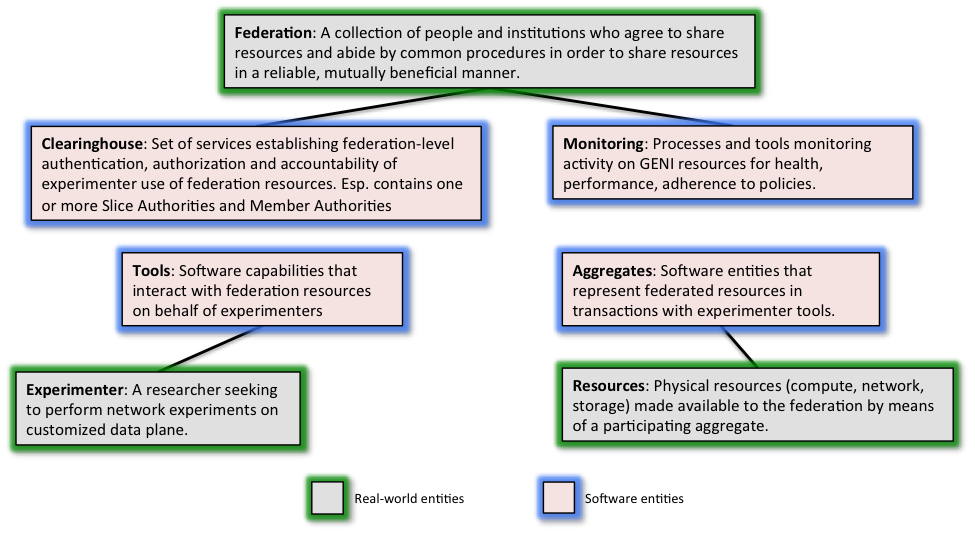

The diagram below shows the GENI network architecture and its components. The GENI network architecture is designed to allow:

- Slicing the network. GENI network links are sliced by Ethernet VLANs: multiple experiments sharing the same physical link are given different VLANs on that link. Slicing by VLAN guarantees traffic isolation among experiments (one slice in GENI cannot see packets in another slice) and also provides best-effort performance isolation.
- Deep programmability. GENI allows programmers to control how packets are forwarded within their experiment.
- Network federation and stitching. GENI is a federated testbed: different organizations host GENI resources and make them available to GENI experimenters. This includes network resources that are owned by regional and national R&E network providers and by campuses hosting GENI racks or wireless base stations. Setting up the data plane for a GENI experiment may therefore require coordination among multiple network resource providers. This is done by a process called GENI Stitching. The figure below illustrates the coordination needed to stitch a Layer 2 link from an experimenter’s compute resource on one rack (Rack A) to a compute resource on another rack (Rack B). This stitching requires provisioning VLANs at the regional networks that Racks A and B connect to and at the national backbone network. Each of these networks needs to allocate and provision a VLAN, and they all need to ensure that traffic can be exchanged between the VLAN they allocated and the VLAN allocated by their neighboring network. For details on GENI stitching, see the Stitching page on the GENI wiki.

GENI wireless networks. GENI wireless base stations have backhaul connections to a local GENI rack and connect through the rack to the rest of the GENI network. This is shown in the GENI network architecture diagram at the top of this page.

Connecting campus resources to GENI.
The best way to connect a campus resource, such as a scientific instrument or a research lab, to GENI is through the data plane switch on a GENI rack. This is illustrated in the figure at the top of this page.

GENI is composed of a broad set of heterogeneous resources, each owned and operated by a different entity (called an aggregate provider). For example, a campus hosting a GENI rack is an aggregate provider. Likewise, an R&E network that provides network connectivity for the GENI data plane network (see Network Architecture) is also an aggregate provider. By joining the GENI federation, these aggregate providers make their resources available to GENI experimenters while still maintaining a degree of control and trust that these resources will be used in a responsible and secure manner. Similarly, GENI experimenters trust aggregate providers to provide the resources promised to them and to enforce any resource isolation guaranteed by the aggregate.

There are simply too many experimenters and aggregate providers for everyone to know everyone else and approve every resource-related transaction. A scalable trust architecture is therefore needed to ensure that the interests of both the aggregate providers and the experimenters are protected. What is needed is a trusted third party that can vouch for the proper operation of resources (for the experimenters) and for the credentials of the experimenters (for the aggregate providers). This trusted third party is the GENI Federation. It establishes common notions of identity, authentication, authorization, and accountability so that all participants in the GENI federation can enter into resource-related transactions in a trusted manner.

Resource owners, experimenters, and federations are real people or groups; GENI establishes software services to represent their interests in these transactions. The following figure shows these real-world entities and their virtual representatives in the GENI Federation Architecture.
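The scalability argument for a trusted third party can be illustrated with a small sketch. This is a toy model, not GENI software: the `Federation` class, the `may_transact` function, and the member names are all hypothetical, and real deployments rely on cryptographic credentials rather than simple set membership. It shows only that each party needs a single relationship with the federation instead of one relationship per counterpart.

```python
from dataclasses import dataclass, field


@dataclass
class Federation:
    """Hypothetical stand-in for the trusted third party (assumption, not a GENI API)."""
    experimenters: set = field(default_factory=set)
    aggregates: set = field(default_factory=set)

    def vouches_for_experimenter(self, name: str) -> bool:
        # The federation vouches for experimenter credentials on behalf of aggregates.
        return name in self.experimenters

    def vouches_for_aggregate(self, name: str) -> bool:
        # The federation vouches for resource providers on behalf of experimenters.
        return name in self.aggregates


def may_transact(fed: Federation, experimenter: str, aggregate: str) -> bool:
    """Both sides rely on the federation instead of knowing each other directly."""
    return (fed.vouches_for_experimenter(experimenter)
            and fed.vouches_for_aggregate(aggregate))


def pairwise_relationships(n_experimenters: int, n_aggregates: int) -> int:
    """Trust relationships needed if every experimenter is vetted by every aggregate."""
    return n_experimenters * n_aggregates


def federated_relationships(n_experimenters: int, n_aggregates: int) -> int:
    """Trust relationships needed if each party only joins the federation."""
    return n_experimenters + n_aggregates


# Illustrative member names (hypothetical).
fed = Federation(experimenters={"alice"}, aggregates={"campus-rack-a"})
allowed = may_transact(fed, "alice", "campus-rack-a")
denied = may_transact(fed, "mallory", "campus-rack-a")
```

With, say, 1,000 experimenters and 50 aggregate providers, `pairwise_relationships(1000, 50)` gives 50,000 relationships to maintain, while `federated_relationships(1000, 50)` gives 1,050.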